Claire still remembers the moment learning stopped feeling like learning.

The first time a grading rubric was attached to her writing, she said, “all the joy was taken away.” What had once been an act of exploration became an exercise in compliance. She wasn’t writing to understand anymore—she was writing to score.

“I remember the first time that a grading rubric was attached to a piece of my writing…. Suddenly all the joy was taken away. I was writing for a grade. I was no longer exploring for me. I want to get that back. Will I ever get that back?” (Olson, 2006)

For many educators, Claire’s experience is painfully familiar. Traditional grading systems, while efficient, often reduce complex thinking into a single number or letter. They tell us what a student achieved, but very little about how they arrived there—or what they need next.

This is precisely the gap that formative assessment was designed to fill.

From Judgment to Growth: Why Formative Assessment Matters More Than Ever

Unlike summative assessment, formative assessment shifts the focus from evaluation to development. It treats learning as an ongoing process, one where feedback, dialogue, and reflection play a central role.

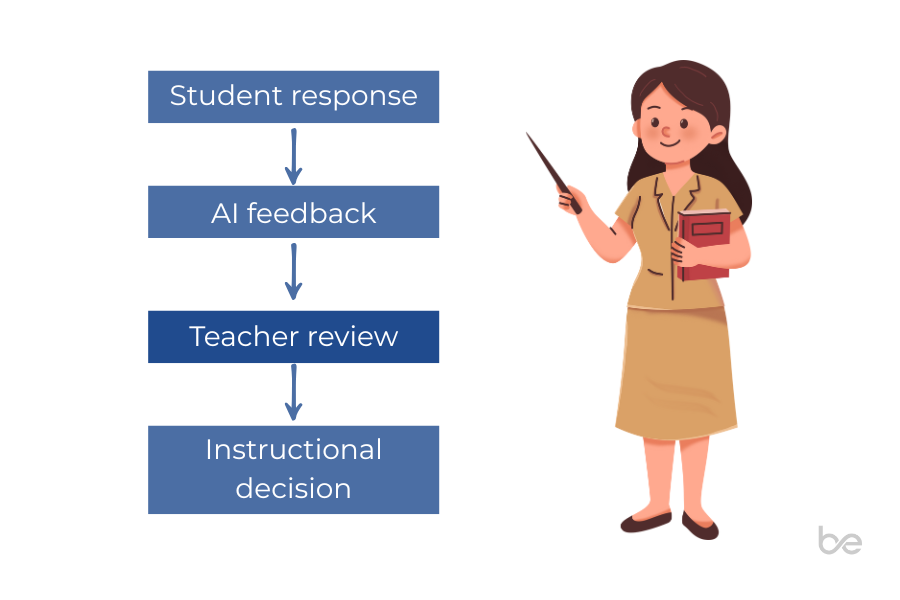

Educational researchers describe formative assessment as a daily practice of gathering evidence, responding to it, and adjusting instruction in real time. In practice, this means asking better questions, observing student thinking, and offering guidance that helps learners move forward—not just rank higher.

Yet in many classrooms, formative assessment struggles to scale. Large class sizes, time constraints, and administrative demands make it difficult for teachers to give every student the kind of timely, individualized feedback that deep learning requires.

This is where conversations about AI often enter the room.

| Summative Assessment | Formative Assessment |
|---|---|
| Final scores | Ongoing feedback |
| Judgment-focused | Growth-focused |
| One-time snapshot | Continuous insight |

AI Doesn’t Understand, but It Can Support Understanding

Generative AI tools, particularly large language models, are now capable of generating feedback, adapting questions, and responding instantly to student input. Used thoughtfully, they can help surface misconceptions, prompt reflection, and personalize learning pathways.

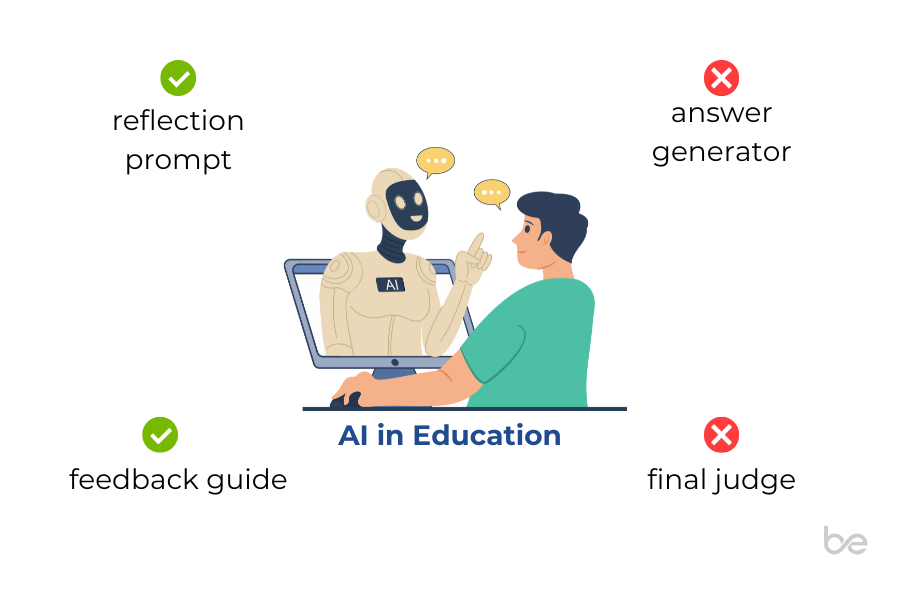

But it’s important to be clear about what AI is—and what it is not.

AI does not “understand” student thinking in the human sense. It predicts responses based on patterns in data. This limitation explains why AI can sometimes produce answers that sound confident but are incorrect, biased, or shallow. It also explains why over-reliance on AI—especially as an answer machine—can undermine critical thinking.

The real educational value of AI emerges not when it gives answers, but when it supports the process of thinking.

Research-backed systems that use AI for formative assessment are intentionally designed to withhold final answers, instead offering prompts, hints, and reflective questions. These systems encourage students to articulate reasoning, revisit ideas, and refine understanding—key ingredients for long-term learning.

What Effective AI-supported Formative Assessment Looks Like

Well-designed AI-supported formative assessment shares a few common principles:

1. Feedback without shortcuts

Studies show that when learners are given correct answers too early, learning becomes superficial. AI systems are most effective when they guide students toward insight rather than delivering it outright.

2. Adaptive, not generic, responses

AI can adjust feedback based on where a learner is struggling—asking follow-up questions, flagging gaps, or suggesting alternate approaches—while still operating within boundaries set by educators.

3. Process over product

Assessment tasks that require explanation, reflection, or revision make it harder to outsource thinking. Oral explanations, draft submissions, and learning logs all shift attention from the final output to the learning journey itself.

4. Teacher-in-the-loop by design

AI should never be the final authority. Educators remain responsible for interpreting insights, making high-stakes decisions, and recognizing creative or unconventional thinking that algorithms may miss.
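
For readers who build or evaluate such tools, the four principles above can be sketched in a few lines of Python. This is only an illustrative sketch, not how any particular product works: the `HintLadder` class, its fields, and the sample hints are all hypothetical, and the acceptance check is assumed to come from the teacher rather than from an AI model. The idea is that the system never reveals the answer, escalates through teacher-written hints, asks for an explanation once the answer is acceptable, and hands off to the teacher when the hints run out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class HintLadder:
    """Hypothetical feedback loop: hints only, never the final answer."""
    is_acceptable: Callable[[str], bool]  # assumption: check supplied by the teacher
    hints: list[str]                      # teacher-written, least to most specific
    attempts: int = 0
    needs_teacher: bool = False           # teacher-in-the-loop flag

    def respond(self, answer: str) -> str:
        if self.is_acceptable(answer):
            # Process over product: ask for reasoning even when the answer is right.
            return "That works. Can you explain your reasoning in your own words?"
        self.attempts += 1
        if self.attempts <= len(self.hints):
            # Adaptive: the hint depends on how many attempts the learner has made.
            return self.hints[self.attempts - 1]
        # Out of hints: flag for a human instead of giving the answer away.
        self.needs_teacher = True
        return "Let's pause here. This one is flagged for your teacher to review with you."


# Example use with a made-up arithmetic task (3 groups of 4).
ladder = HintLadder(
    is_acceptable=lambda a: a.strip() == "12",
    hints=[
        "What is the question asking you to find?",
        "Try drawing the 3 groups of 4 and counting them.",
    ],
)
for attempt in ["7", "10", "11", "12"]:
    print(ladder.respond(attempt))
```

In a real system the hints might be drafted by a language model and reviewed by the teacher, but the boundary stays the same: the tool guides, and the educator decides what happens once `needs_teacher` is set.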

Addressing the Real Risks: Bias, Hallucinations, and Over-reliance

Skepticism toward AI in education is not misplaced. Research highlights three persistent risks:

- bias, inherited from training data that may not represent all learners fairly

- hallucinations, where AI produces plausible but false information

- over-reliance, which can weaken independent reasoning and academic integrity

The solution is not avoidance but intentional design and literacy.

Students need to be taught how AI works, why it can be wrong, and how to critically evaluate its outputs. Educators need tools that are transparent, auditable, and aligned with pedagogical goals—not black boxes that quietly shape learning.

When AI is framed as a thinking partner rather than an answer key, it can actually strengthen critical thinking rather than erode it.

Why Voice and Conversation Matter in the Age of GenAI

One emerging insight from classrooms experimenting with AI-supported assessment is this: how students explain matters as much as what they submit.

Written work is increasingly vulnerable to copy-paste and surface-level paraphrasing. Spoken explanations—where students articulate reasoning, respond to follow-up questions, and clarify ideas in real time—are harder to fake and more revealing of true understanding.

Conversational assessment, especially when supported by AI, opens up new possibilities for formative feedback: probing questions, immediate clarification, and insights into how students think—not just what they produce.

AI Won’t Replace Teachers—and That’s the Point

Perhaps the most persistent fear surrounding AI in education is that it will make teachers obsolete. Research suggests the opposite.

AI can handle repetitive tasks, surface patterns in learning data, and offer immediate feedback at scale. What it cannot do is build trust, recognize emotional cues, mentor learners, or decide when a student needs encouragement rather than correction.

The most promising future is not AI instead of teachers, but AI in service of teachers—freeing them to focus on what humans do best: guiding, inspiring, and caring.

In this model, educators are not passive users of technology. They are designers of learning experiences, stewards of ethics, and interpreters of insight.

Moving Forward: Assessment That Supports Real Learning

The question is no longer whether AI will appear in classrooms—it already has. The real question is how we choose to use it.

When aligned with formative assessment principles, AI can help restore what grading alone often strips away: curiosity, reflection, and meaningful feedback. When grounded in teacher expertise and ethical guardrails, it becomes a tool for clarity rather than control.

At better-ed, this philosophy shapes how we think about assessment: conversations over checklists, insight over scores, and technology that supports—not substitutes—the human work of teaching.

Because in the end, learning that truly sticks is learning that is understood, reflected on, and spoken—not just graded.
